Development of a 3D augmented map platform based on virtual walls for visibility-constrained drone flight control

The main purpose of the proposed research is to develop core technologies for augmented 3D map construction and drone control using landmark-based virtual walls, applicable to privacy protection and air traffic control. The objectives are twofold: (1) development of multi-sensor fusion and feature extraction techniques, privacy protection and air traffic control techniques based on the 3D augmented map, and a real-time user-drone interaction technique; and (2) software integration for real-world applications.
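As an illustration only, a virtual wall can be pictured as a no-fly volume attached to a landmark in the 3D map. The sketch below is not the platform's implementation: it assumes each wall is an axis-aligned 3D box in local map coordinates and uses the standard slab method to check whether a straight flight leg between two waypoints enters any wall. The names `VirtualWall`, `segment_hits_wall`, and `first_blocked_leg` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualWall:
    """Hypothetical no-fly volume: an axis-aligned box in local map coordinates (metres)."""
    min_corner: tuple[float, float, float]
    max_corner: tuple[float, float, float]


def segment_hits_wall(p0, p1, wall: VirtualWall) -> bool:
    """Return True if the straight segment p0 -> p1 intersects the box (slab method)."""
    t_enter, t_exit = 0.0, 1.0  # parametric range of the segment still inside all slabs
    for axis in range(3):
        start, end = p0[axis], p1[axis]
        lo, hi = wall.min_corner[axis], wall.max_corner[axis]
        delta = end - start
        if delta == 0.0:
            # Segment is parallel to this slab, so it must already lie within it.
            if start < lo or start > hi:
                return False
            continue
        t0 = (lo - start) / delta
        t1 = (hi - start) / delta
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False  # the per-axis overlaps do not intersect
    return True


def first_blocked_leg(waypoints, walls):
    """Index of the first leg waypoints[i] -> waypoints[i + 1] entering any wall, else None."""
    for i in range(len(waypoints) - 1):
        if any(segment_hits_wall(waypoints[i], waypoints[i + 1], w) for w in walls):
            return i
    return None
```

For example, with `wall = VirtualWall((10.0, -5.0, 0.0), (20.0, 5.0, 30.0))`, the route `[(0.0, 0.0, 10.0), (30.0, 0.0, 10.0), (30.0, 20.0, 10.0)]` gives `first_blocked_leg(route, [wall])` → `0`, because the first leg passes straight through the box. Real landmark walls would likely need oriented or mesh-shaped volumes; boxes just keep the geometry test short.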


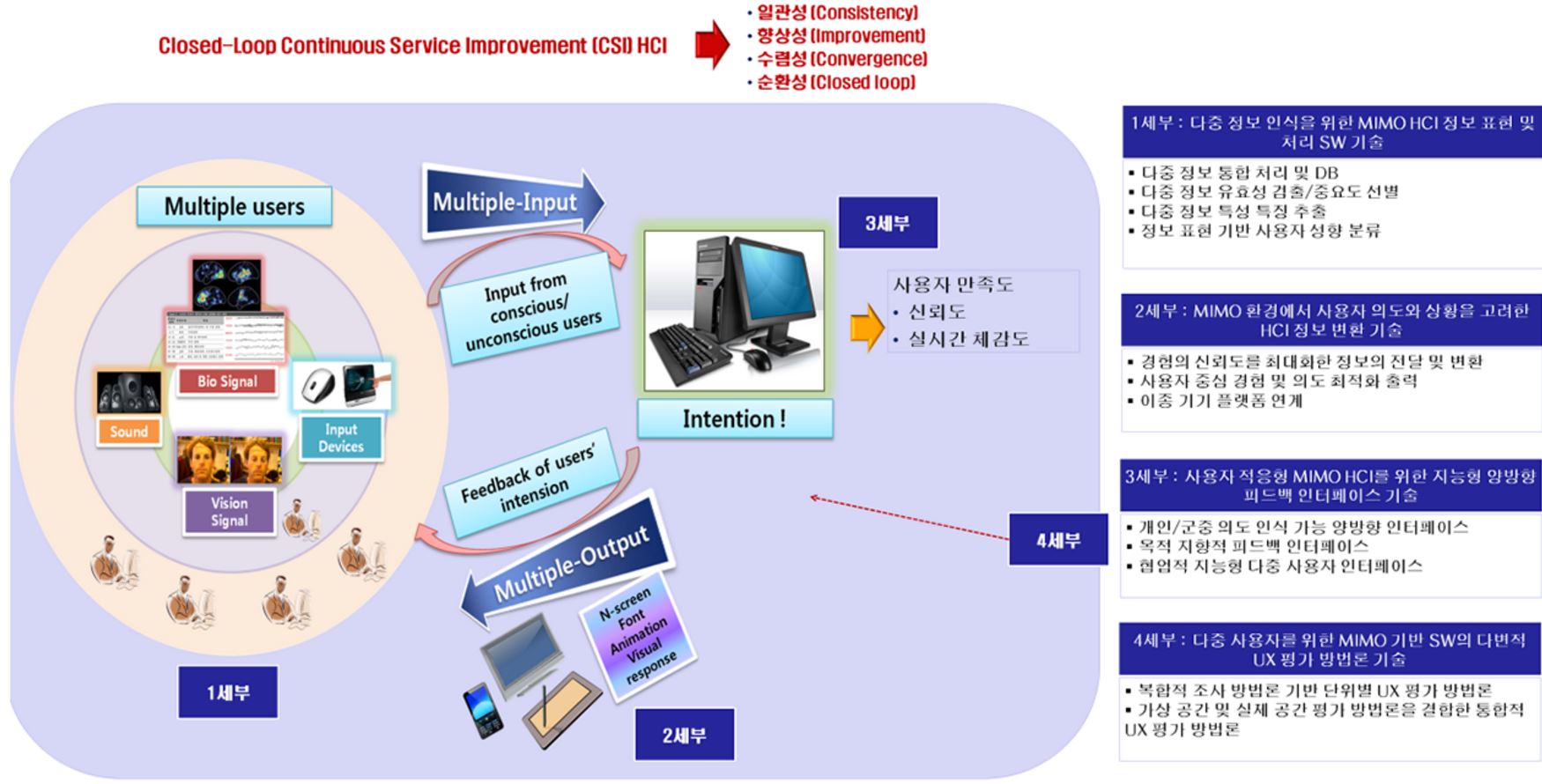

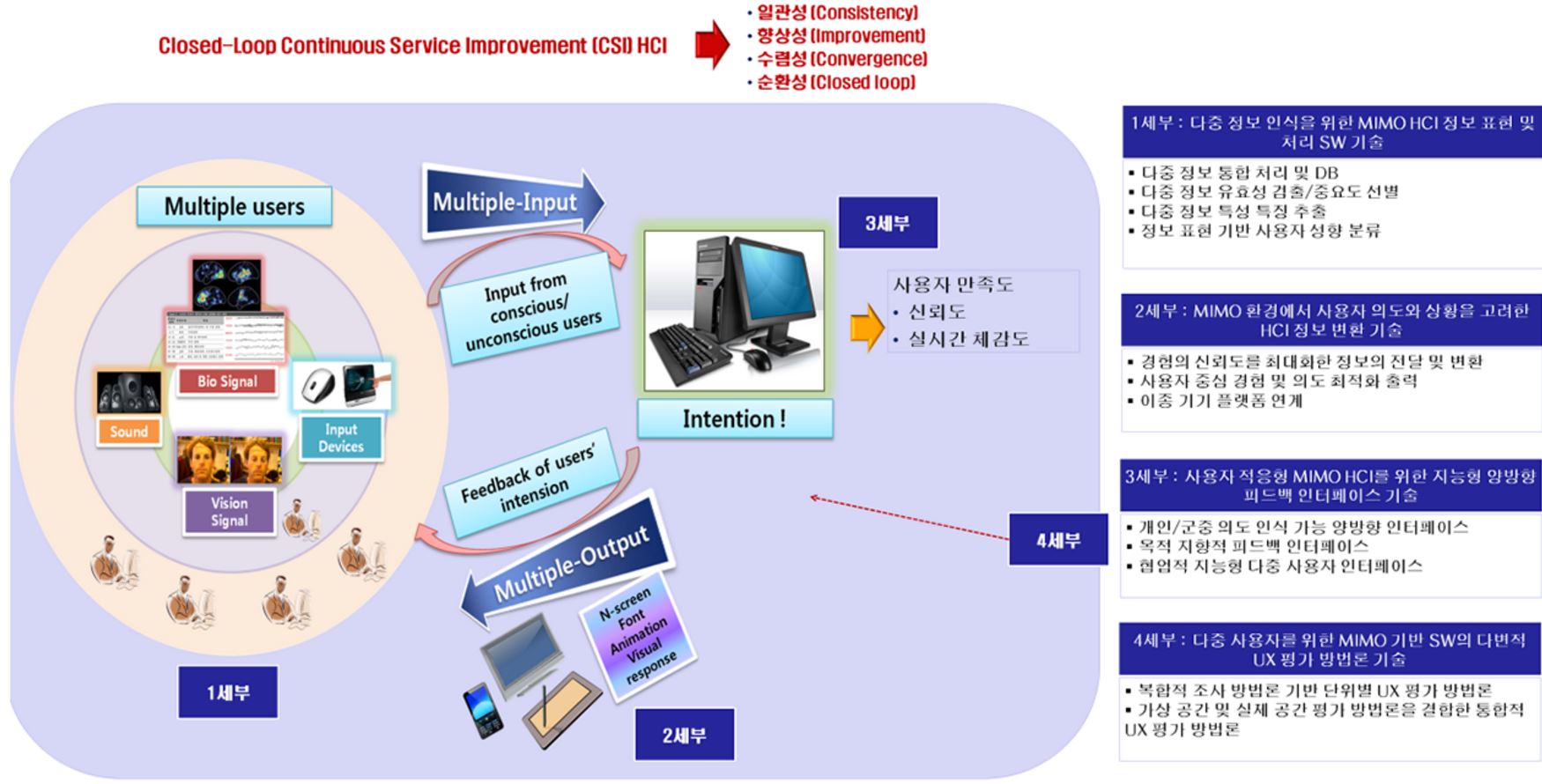

User-centric MIMO (multi-input, multi-output) Human-Computer Interface

Development of MIMO HCI Software Technology for Actively Recognizing and Responding to the Intentions of Multiple Users

The final research goals are: (1) development of core software technology for a novel MIMO HCI that controls multiple computing devices and recognizes the conscious and unconscious intent of multiple users; and (2) development of core software for acquiring, processing, representing, transcoding, and converging multiple types of information from multiple users, and for exchanging information among multiple computing devices.


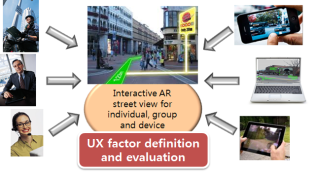

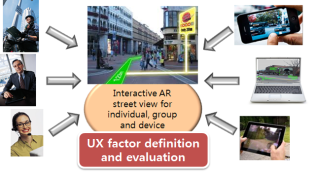

Development of 3D reconstruction technology for car accident scenes using multi-view black box images

Reconstruction/Re-augmentation Software Framework for Massive-Scale Street-View Information

We introduce an integration framework based on information analysis, hierarchical data representation, and reconstruction techniques for massive-scale multimedia-spatial data. Our research sub-goals include the following: hyper-perspective panoramic image construction; object-type data reconstruction; interactive re-augmentation; user-centric, device-optimized data representation; and large-scale multimedia data processing.


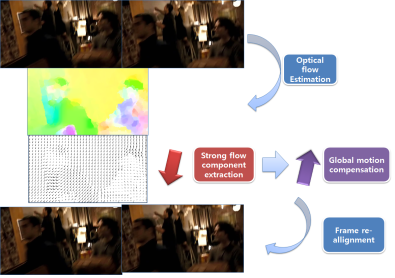

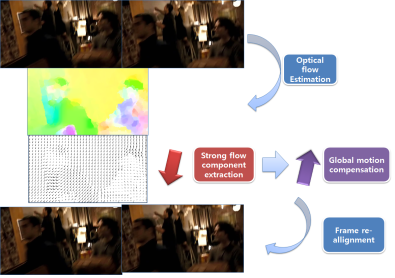

Content-based video assessment using color and optical flow pattern analysis

The main goal of the proposed research is to quantify the factors that may be harmful to viewers watching video streams from camera input, computer games, and other video content. Previous work has mainly focused on clinical trials investigating why and how people experience simulation sickness. In this research, we propose a content-based analysis framework that efficiently quantifies the factors that cause simulation sickness. In particular, we tackle the problem by analyzing color distributions and optical flow in consecutive video frames.
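To make the two kinds of cues concrete, here is a minimal sketch of per-frame-pair features such an analysis might compute; it is not the project's actual framework. It assumes frames arrive as HxWx3 `uint8` NumPy arrays. Color-distribution change is measured as the total-variation distance between joint RGB histograms of consecutive frames, and, as a lightweight stand-in for dense optical flow, motion is estimated as a single global translation per frame pair by exhaustive block matching. The function names and the `bins` and `max_shift` parameters are hypothetical.

```python
import numpy as np


def color_histogram(frame: np.ndarray, bins: int = 8) -> np.ndarray:
    """Normalized joint RGB histogram of an HxWx3 uint8 frame, flattened to 1-D."""
    pixels = frame.reshape(-1, 3)
    hist, _ = np.histogramdd(pixels, bins=(bins, bins, bins), range=((0, 256),) * 3)
    return hist.ravel() / pixels.shape[0]


def global_shift(prev_gray: np.ndarray, next_gray: np.ndarray, max_shift: int = 4):
    """Integer (dy, dx) minimizing mean absolute difference over the overlapping region.

    Assumes content at prev[y, x] moves to next[y + dy, x + dx]; frames must be
    larger than max_shift in both dimensions. Ties prefer the smallest motion.
    """
    h, w = prev_gray.shape
    shifts = sorted(
        ((dy, dx) for dy in range(-max_shift, max_shift + 1)
         for dx in range(-max_shift, max_shift + 1)),
        key=lambda s: s[0] ** 2 + s[1] ** 2,
    )
    best, best_err = (0, 0), np.inf
    for dy, dx in shifts:
        a = prev_gray[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
        b = next_gray[max(0, dy):h - max(0, -dy), max(0, dx):w - max(0, -dx)]
        err = np.mean(np.abs(a.astype(float) - b.astype(float)))
        if err < best_err:
            best, best_err = (dy, dx), err
    return best


def sickness_cues(frames, bins: int = 8, max_shift: int = 4):
    """For each consecutive frame pair, return (color_change in [0, 1], motion in pixels)."""
    cues = []
    for prev, nxt in zip(frames, frames[1:]):
        # Total-variation distance between the two color distributions.
        color_change = 0.5 * np.abs(color_histogram(prev, bins) - color_histogram(nxt, bins)).sum()
        # Grayscale by channel averaging, then a single global motion vector.
        dy, dx = global_shift(prev.mean(axis=2), nxt.mean(axis=2), max_shift)
        cues.append((float(color_change), float(np.hypot(dy, dx))))
    return cues
```

A panning shot yields low color change but high motion, while an abrupt cut to a very different scene yields high color change. A dense flow field (for example, OpenCV's Farneback implementation) would also capture local motion, such as objects moving against a static background, which a single global shift cannot.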


Digital guardian for visually impaired people

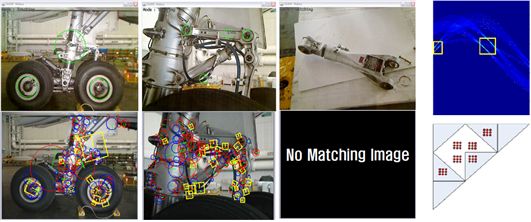

Augmented reality system for aircraft maintenance